This blog post starts immediately with the topic. It’s worth reading the following articles first:

First Steps with Apache Kafka

Before we explore why the Kafka rocket can fly and how it does it, let’s ignite the engines together and take a small test flight:

Creating Topics

We want to capture all flight phases in Kafka and first create a topic for this purpose. Topics are similar to database tables in that we store a collection of data on a specific subject in topics. In our case, it’s flight data, so we name the topic accordingly flugdaten:

kafka-topics.sh \

--create \

--topic "flugdaten" \

--partitions 1 \

--replication-factor 1 \

--bootstrap-server localhost:9092

Created topic flugdaten.With the kafka-topics.sh command, we manage our topics in Kafka. Here we instruct Kafka with the --create argument to create the topic flugdaten (--topic flugdaten). Initially, we start with one partition (--partitions 1) and without replicating the data (--replication-factor 1). Finally, we specify which Kafka cluster kafka-topics.sh should connect to. In our case, we use our local cluster, which listens on port 9092 by default (--bootstrap-server localhost:9092). The command confirms the successful creation of the topic. If we get errors here, it’s often because Kafka hasn’t started yet and is therefore not reachable, or because the topic already exists.

Producing Messages

Now we have a place where we can store our data. The rocket’s onboard computer continuously sends us updates about the rocket’s flight status. For our simulation, we use the command-line tool kafka-console-producer.sh. This producer and other useful tools come directly with Kafka. The producer connects to Kafka, accepts data from the command line, and sends it as messages to a topic (configurable via the --topic parameter). Let’s write the message "Countdown started" to our newly created topic flugdaten:

echo "Countdown started" | \

kafka-console-producer.sh \

--topic flugdaten \

--bootstrap-server=localhost:9092Consuming Messages

Our ground station now wants to read this data and display it on a large screen, for example, so we can see if the rocket is really working as we expect it to. Let’s see what has happened so far. To read our recently sent message, we start the kafka-console-consumer.sh, which is also part of the Kafka family:

timeout 10 kafka-console-consumer.sh \

--topic flugdaten \

--bootstrap-server=localhost:9092

Processed a total of 0 messagesWhen we start the kafka-console-consumer.sh, it runs continuously by default until we actively stop it (for example with CTRL+C). This would also be the desired behavior if we really wanted to display the current state of the rocket somewhere. In our example, we use the timeout command so that the consumer automatically stops after at most 10 seconds. With the consumer, we must again specify which topic it should use (--topic flugdaten).

Somewhat surprisingly, no message is displayed. This is because the kafka-console-consumer.sh starts reading at the end of the topic by default and only outputs new messages. To also display already written data, we need to use the --from-beginning flag:

timeout 10 kafka-console-consumer.sh \

--topic flugdaten \

--from-beginning \

--bootstrap-server=localhost:9092

Countdown started

Processed a total of 1 messagesWhat Happened?

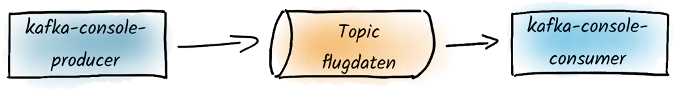

This time we see the message "Countdown started"! So what happened? With the kafka-topics.sh command, we created the topic flugdaten in Kafka, and with the kafka-console-producer.sh, we produced the message "Countdown started". We then read this message again with the kafka-console-consumer.sh. We can represent this data flow as follows:

Without further specifications, the kafka-console-consumer.sh always starts reading at the end. This means that if we want to read all messages, we need to use the --from-beginning flag.

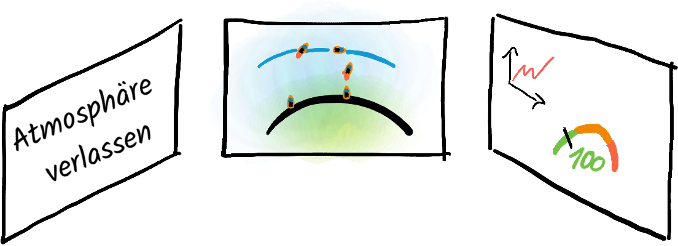

Interestingly, and unlike many messaging systems, we can read messages not just once, but as many times as we want. We can use this to connect multiple independent ground stations to the topic, for example, so that all can read the same data. Or there are different systems that all need the same data. We can imagine that we don’t just have one display screen, but also other services, such as a service that compares the flight data with current weather data and decides if action needs to be taken. Maybe we also want to analyze the data after the flight and need the historical flight data for this. We can simply run the consumer multiple times and get the same result each time.

Now we want to display the current state of the rocket in our control center, and in such a way that the display updates immediately when new data is available. To do this, we start the kafka-console-consumer.sh (without timeout) in another terminal window. As soon as new data is available, the consumer fetches it from Kafka and displays it on the command line:

# Don't forget: Use CTRL+C to stop the consumer

kafka-console-consumer.sh \

--topic flugdaten \

--bootstrap-server=localhost:9092Producing and Consuming Messages Simultaneously

To simulate the producer on the rocket side, we now start the kafka-console-producer.sh. The command doesn’t stop until we press CTRL+D and thus send the EOF signal to the producer:

# Don't forget: Use CTRL+D to stop the producer

kafka-console-producer.sh \

--topic flugdaten \

--bootstrap-server=localhost:9092The kafka-console-producer.sh sends one message to Kafka per line we write. This means we can now type messages into the terminal with the producer:

# Producer window:

echo "Countdown ended" | \

kafka-console-producer.sh \

--topic flugdaten \

--bootstrap-server=localhost:9092

> Countdown ended

> Liftoff

> Left atmosphere

> Preparing reentry

> Reentry successful

> Landing successfulWe should see these appear promptly in the window with the consumer:

# Consumer window:

Countdown ended

Liftoff

Left atmosphere

Preparing reentry

Reentry successful

Landing successfulLet’s imagine that part of our ground crew is working from home and wants to follow the flight from there. To do this, they start their consumer independently. We can simulate this by starting a kafka-console-consumer.sh in another terminal window that displays all data from the beginning:

# Window Consumer 2:

kafka-console-consumer.sh \

--topic flugdaten \

--from-beginning \

--bootstrap-server=localhost:9092

Countdown started

Countdown ended

Liftoff

Left atmosphere

Preparing reentry

Reentry successful

Landing successfulWe see here that data that is written once can be read in parallel by multiple consumers without the consumers having to talk to each other or register with Kafka beforehand. Kafka doesn’t delete any data initially, which means we can also start a consumer later that can read historical data.

Let’s say we want to fly multiple rockets simultaneously. Kafka has no problem with this and can process data from many producers simultaneously without any issues. So let’s start another producer in another terminal window:

# Producer for Rocket 2:

kafka-console-producer.sh \

--topic flugdaten \

--bootstrap-server=localhost:9092

> Countdown startedWe see all messages from all producers appearing in all our consumers in the order in which the messages were produced:

# Window Consumer 1:

[…]

Landing successful

Countdown started

# Window Consumer 2:

[…]

Landing successful

Countdown startedNow we have the problem that we can’t distinguish between messages from Rocket 1 and 2. We could potentially write into each message which rocket sent the message. More on that later.

Before we continue, we should abort the flight of our second rocket since we want to launch it later:

# Producer for Rocket 2:

[…]

> Countdown abortedSummary

We have now successfully launched a rocket on a test basis and landed it again. In doing so, we wrote some data to Kafka. To do this, we first created a topic flugdaten with the command-line tool kafka-topics.sh, into which we write all flight data for our rocket. We produced some data into this topic using kafka-console-producer.sh. In our case, these were pieces of information about the current status of the rocket. We could read and display this data using the kafka-console-consumer.sh. We even went further and produced data in parallel with multiple producers and read data simultaneously with multiple consumers. With the --from-beginning flag in the kafka-console-consumer.sh, we were able to access historical data. We have thus already learned about three command-line tools that come with Kafka.

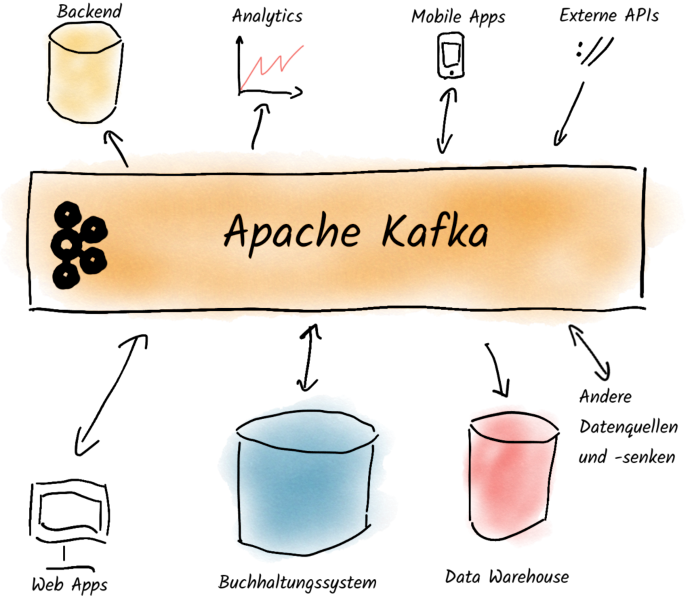

After gathering these experiences, we can now close all open terminals. We end producers with CTRL+D and consumers with CTRL+C. This example should not hide the fact that Kafka is used everywhere where larger amounts of data are processed. From our training experience, we know that Kafka is intensively used by many car manufacturers, supermarket chains, logistics service providers, and even in many banks and insurance companies.

In this blog post, we got a first overview of Kafka and worked through a simple test flight scenario. In our book, we continue from this point by diving deeper into the subject and examining the Kafka architecture more closely. We will look at how Kafka messages are structured and how exactly they are organized in topics. In this context, we will also deal with Kafka’s scalability and reliability. We will also learn more about producers, consumers, and the Kafka cluster itself.

Continue reading

Setting up a Kafka Test Environment

Learn how to set up a multi-node Kafka test environment on a single machine for development and testing purposes. This guide shows you how to configure three Kafka brokers with proper controller setup.

Read more

What is Apache Kafka?

What is Apache Kafka all about? This program that virtually every major (German) car manufacturer uses? This software that has allowed us to travel across Europe with our training sessions and workshops and get to know many different industries - from banks, insurance companies, logistics service providers, internet startups, retail chains to law enforcement agencies? Why do so many different companies use Apache Kafka?

Read more