How many partitions do I need in Apache Kafka?

Determining the right number of partitions is a fundamental aspect of Apache Kafka system design. It’s a decision best made during the initial setup, as adding partitions later during live operations requires considerable effort and introduces risk. How many partitions should you configure from the beginning? This is the quintessential question I hear repeatedly in my training courses. I’ll be upfront: this article won’t give you a fixed answer, but it will provide the necessary information for you to make an informed decision based on your specific use case.

Why have partitions in Apache Kafka at all?

In Kafka, we use partitions to accelerate the processing of large amounts of data. Instead of writing all our data from a topic into one partition (and thus one broker), we divide our topics into different partitions and distribute these partitions across our brokers. Unlike other messaging systems, our producers are responsible for deciding which partition our messages are written to. If we use keys, the producer distributes the data so that data with the same key ends up on the same partition. This ensures that the order between messages with the same key is correctly maintained. If we don’t use keys, then messages are distributed to the partitions using a round-robin method.

Furthermore, we can meaningfully have at most as many consumers in a consumer group as we have partitions in our topics. Often the bottleneck in data processing is not the broker or the producers, but the consumers who not only have to read the data but also process it further.

Generally speaking: the more partitions, the

-

higher our data throughput: Both the brokers and the producers can process different partitions completely independently – and thus in parallel. This allows these systems to better utilize available resources and process more messages. Important: The number of partitions can be significantly higher than the number of brokers. This is not a problem.

-

more consumers we can have in our consumer groups: This potentially also increases the data throughput, as we can distribute the work across more shoulders. But caution: The more individual systems we have, the more parts can fail and cause problems.

-

more open file handles we have on the brokers: Kafka opens two files for each segment of a partition: the log and the index. While this hardly affects performance, we should definitely increase the allowed number of open files on the operating system side.

-

longer downtimes occur: If a Kafka broker is shut down cleanly, it unregisters itself from the controller, and the controller can move the partition leaders to other brokers without downtime. However, if a broker fails uncontrollably, there may be longer downtimes as there is no leader for a large number of partitions. Due to limitations in Zookeeper, the consumer can only move one leader at a time. This takes about 10 ms. With thousands of leaders that need to be moved, this can take minutes in some circumstances. If the controller fails, it must read in the leaders of all partitions. If this takes about 2 ms per leader, the process takes even longer. With KRaft, this problem will become much smaller.

-

the clients consume more RAM: The clients create buffers per partition, and if a client interacts with many partitions, possibly distributed across many topics (especially as a producer), then the RAM consumption adds up significantly.

Limits on partitions

There are no hard limits on the number of partitions in Kafka clusters. But here are some general rules for Zookeeper-based clusters. With KRaft these limits do not apply:

-

maximum of 4000 partitions per broker (in total; usually distributed across many topics)

-

maximum of 200,000 partitions per Kafka cluster (in total; usually distributed across many topics)

-

consequently: maximum of 50 brokers per Kafka cluster

This reduces downtime if something does go wrong. But caution: It shouldn’t be your goal to push these limits. In many "Medium Data" applications, you don’t need any of this.

Rules of thumb

As described at the beginning, there is no "right" answer to the question of how many partitions. Over time, however, the following rules of thumb have emerged:

-

No prime numbers: Even though many examples on the internet (or in training) use three partitions, it’s often a bad idea to use prime numbers, as prime numbers are difficult to divide across different numbers of brokers and consumers.

-

Easily divisible numbers: Therefore, numbers that can be divided by many other numbers should always be used.

-

Multiple of consumers: This allows partitions to be evenly distributed across the consumers of a consumer group.

-

Multiple of brokers: Unless we have a very large number of brokers, this allows us to distribute partitions (and leaders!) evenly across all brokers.

-

Consistency in the Kafka cluster: At the latest when we want to use Kafka Streams, we realize that it makes sense not to have wild growth in partitions. For example, if we want to make a join over two topics and these two topics do not have the same number of partitions, Kafka Streams must repartition one topic beforehand. This is a costly affair that we want to avoid if possible.

-

Dependent on performance measurements: If we know our target data throughput and also know the measured data throughput of our consumers and producers per partition, we can calculate how many partitions we need. For example, if we know we want to move 100 MB/s of data and can achieve 10 MB/s per partition in the producer and 20 MB/s in the consumer, we need at least 10 partitions (and at least 5 consumers in the consumer group).

-

Don’t overdo it: This is not a competition to set up the largest possible number of partitions. If you only process a few tens of thousands of messages per day, you don’t need hundreds of partitions.

Examples from practice

In my consulting practice, 12 has emerged as a good benchmark for the number of partitions. For clients who process very little data with Kafka (or have to pay per partition), even smaller numbers can make sense (e.g., 2, 4, 6). If you process large amounts of data, then Pere’s Excel spreadsheet, which he has made available on GitHub, helps: kafka-cluster-size-calculator

Further information:

-

Confluent Blog: How to choose the number of topics/partitions in a Kafka cluster?

-

Apache Kafka in Action pages 84: "How many partitions should we have"

Continue reading

Kafka: How to Produce Data Reliably

Want to ensure that not a single Kafka message gets lost? In this guide, I'll show you how to achieve maximum reliability when producing with the right configurations and what to do in case of errors.

Read more

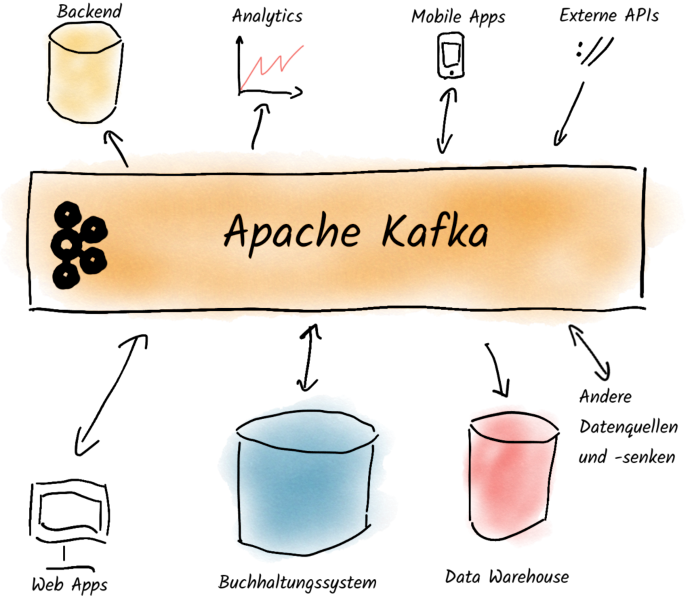

What is Apache Kafka?

What is Apache Kafka all about? This program that virtually every major (German) car manufacturer uses? This software that has allowed us to travel across Europe with our training sessions and workshops and get to know many different industries - from banks, insurance companies, logistics service providers, internet startups, retail chains to law enforcement agencies? Why do so many different companies use Apache Kafka?

Read more