Kafka in Banking: A Bridge Between Worlds for Long-Term, Cost-Effective Projects

Some time ago, a report by Süddeutsche Zeitung made headlines. It was about a group of NASA retirees who, in 2014, 37 years after the launch of the world-famous Voyager missions, were still maintaining the space probes. The reason? These old veterans are among the few who still fully understand the engineering of that era.

The NASA case sounds romantic, but it highlights a problem: when expertise is concentrated in a few "all-knowing" individuals, their retirement becomes a real threat.

The banking sector may well have one or more such groups of seasoned veterans. There, however, the situation is anything but amusing.

Many banks still use various mainframes to handle their core business. The software for these massive computers was written decades ago. Many of the development teams from that time have long since retired or moved to other companies.

Modifying these systems is considered extremely difficult because hardly anyone is left who is really familiar with these archaic structures and with programming languages like COBOL. A lot of knowledge went into their development, and much of it now survives only in the program code.

Simply throwing away these systems is also not an option: too many current and vital processes depend on them.

Solution Approach: "Liberating" Data from Mainframes through Apache Kafka

One approach many banks are taking to solve this dilemma is to "liberate" the data from their mainframes. The mainframes themselves must and should continue to exist. The only difference is that authorized users can access the information at any time. This way, banks can keep meeting the requirements of the banking world in the future. Apache Kafka is one of the most effective ways to build this bridge between legacy and modern systems.

For example, some of my clients from the financial sector are in the process of introducing new, user-friendly frontends for their banking customers and employees. I’m talking about apps or improved websites. Banks often proceed as follows:

- First, the data has to get from the mainframe into Kafka. This is not trivial! Extracting data from core banking systems is fraught with difficulty: when they were first built, the old systems were not designed to output such diverse information in the quantities needed today. Banks have to choose between developing custom solutions and using existing connectors as interfaces. Both options require considerable development effort. The good news: modern systems typically offer a direct connection to Kafka.

- Then the data must be enriched and transformed, ideally in real time. Only then can data generated on the mainframe reach other systems as quickly as possible. Stream processing frameworks like Kafka Streams or Apache Flink are ideal for this.

- For many of these transformations, we can even avoid complex code and instead rely on SQL, for example with Apache Flink.

- But now it gets really exciting and expensive: a normal-sized bank has millions of customer records. Even if each comes with only 1,000 account transactions, a low estimate, that adds up to billions of data points. Loading this data from the mainframe costs a lot of money, especially since it should be done quickly.
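The enrich-and-transform step from the list above can be illustrated with a minimal sketch in plain Python. It is not Kafka Streams or Flink code: the record shapes (`RawTransaction`, `EnrichedTransaction`), the customer lookup table, and the sample values are all hypothetical, and a generator merely stands in for a stream processor that handles records one at a time as they arrive.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator


@dataclass(frozen=True)
class RawTransaction:
    """Hypothetical shape of a record as extracted from the mainframe."""
    account_id: str
    amount_cents: int
    currency: str


@dataclass(frozen=True)
class EnrichedTransaction:
    """The same record after enrichment, ready for downstream systems."""
    account_id: str
    customer_name: str
    amount_cents: int
    currency: str


def enrich(
    stream: Iterable[RawTransaction],
    customers: Dict[str, str],
) -> Iterator[EnrichedTransaction]:
    """Process records one at a time, the way a stream processor would."""
    for tx in stream:
        name = customers.get(tx.account_id)
        if name is None:
            # No reference data for this account: this sketch simply skips it.
            continue
        yield EnrichedTransaction(
            account_id=tx.account_id,
            customer_name=name,            # enrichment: join with reference data
            amount_cents=tx.amount_cents,
            currency=tx.currency.upper(),  # transformation: normalize the code
        )


# Made-up sample data, for illustration only.
raw = [
    RawTransaction("A-1", 1999, "eur"),
    RawTransaction("A-9", 500, "eur"),  # unknown account, will be skipped
]
enriched = list(enrich(raw, {"A-1": "Jane Doe"}))
```

In a real deployment, the same per-record logic would live in a Kafka Streams topology or a Flink job, and the decision to skip records without reference data would need to be replaced by whatever the bank's data-quality rules require.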

But there’s good news: Apache Kafka doesn’t forget. Banks can’t avoid this expensive process, but with Kafka and a suitably configured retention period, they can ensure it remains a one-time effort.
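To put rough numbers on this, here is a back-of-the-envelope calculation. Only the 1,000 transactions per customer come from the estimate above; the customer count (one concrete reading of "millions") and the number of applications are assumptions chosen purely for illustration.

```python
# Assumption: 2 million customers, one possible reading of "millions".
customers = 2_000_000
# From the text: 1,000 account transactions per customer, a low estimate.
transactions_per_customer = 1_000

records_per_full_load = customers * transactions_per_customer


def records_read_from_mainframe(applications: int, load_once: bool) -> int:
    """Total records pulled from the mainframe across all applications."""
    loads = 1 if load_once else applications
    return records_per_full_load * loads


# Hypothetical: five applications need the data over the years.
with_kafka = records_read_from_mainframe(applications=5, load_once=True)
without_kafka = records_read_from_mainframe(applications=5, load_once=False)

print(f"{records_per_full_load:,} records per full load")
print(f"{with_kafka:,} records read if loaded once into Kafka")
print(f"{without_kafka:,} records read if every application loads separately")
```

The point of the sketch is only the ratio: the mainframe load scales with the number of full loads, not with the number of applications, once the data is retained in Kafka.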

Even if a new application is added months or years later and needs the original mainframe data again, the information is already available: the new application can read it directly from Kafka. After a (preferably agile) approval process, the data is ready immediately. Customers can access information within days instead of months. This is what I mean when I talk about "liberating" data.
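Why a later application does not need another mainframe load can be shown with a small in-memory model. This is a sketch of the idea, not the Kafka client API: `RetainedLog`, the application names, and the sample records are hypothetical stand-ins for a topic whose records are retained and for consumers that each track their own read position.

```python
from typing import Dict, List


class RetainedLog:
    """In-memory stand-in for a topic that keeps its records."""

    def __init__(self) -> None:
        self._records: List[str] = []
        self._offsets: Dict[str, int] = {}  # read position per consumer

    def append(self, record: str) -> None:
        self._records.append(record)

    def poll(self, consumer: str, max_records: int = 100) -> List[str]:
        """Return the next records for this consumer and advance its offset."""
        start = self._offsets.get(consumer, 0)  # new consumers start at 0
        batch = self._records[start:start + max_records]
        self._offsets[consumer] = start + len(batch)
        return batch


log = RetainedLog()

# The expensive one-time load from the mainframe (made-up records).
for record in ["tx-1", "tx-2", "tx-3"]:
    log.append(record)

# The first application reads everything.
first_app = log.poll("mobile-app")

# Much later, a new application starts from the beginning of the same log.
later_app = log.poll("new-reporting-app")

# Reading does not remove records; each consumer only moves its own offset.
nothing_new = log.poll("mobile-app")
```

In Kafka itself, this behaviour depends on configuration: the topic has to retain the data long enough (for example via the `retention.ms` topic setting), and a new consumer group typically needs `auto.offset.reset=earliest` to start from the oldest available record.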

Summary: What Kafka Brings to Banks

With Kafka, banks "liberate" data from mainframes and core banking systems. They can distribute information within the company more flexibly and according to need. Core banking systems no longer need to be accessed directly by every new app. This significantly reduces costs and accelerates product development. Teams can access the data they require in real time and much more easily than before.

Apache Kafka is thus not only an instrument for efficiently retrieving data from old systems at any time. It also keeps that information readily accessible in the long term, even after a project is supposedly completed.

This way, smart companies use a one-time investment to reduce the cost of many future enhancements and new developments.

This blog will soon be expanded with a case interview from the industry. Don’t want to miss it? Follow me on LinkedIn!

Continue reading

Kafka in Automotive: The Solution for Exponentially Growing Data Traffic?

Modern vehicles produce enormous amounts of data. This challenges not only mobile networks but also the IT systems of manufacturers. How can Apache Kafka help?

Read more

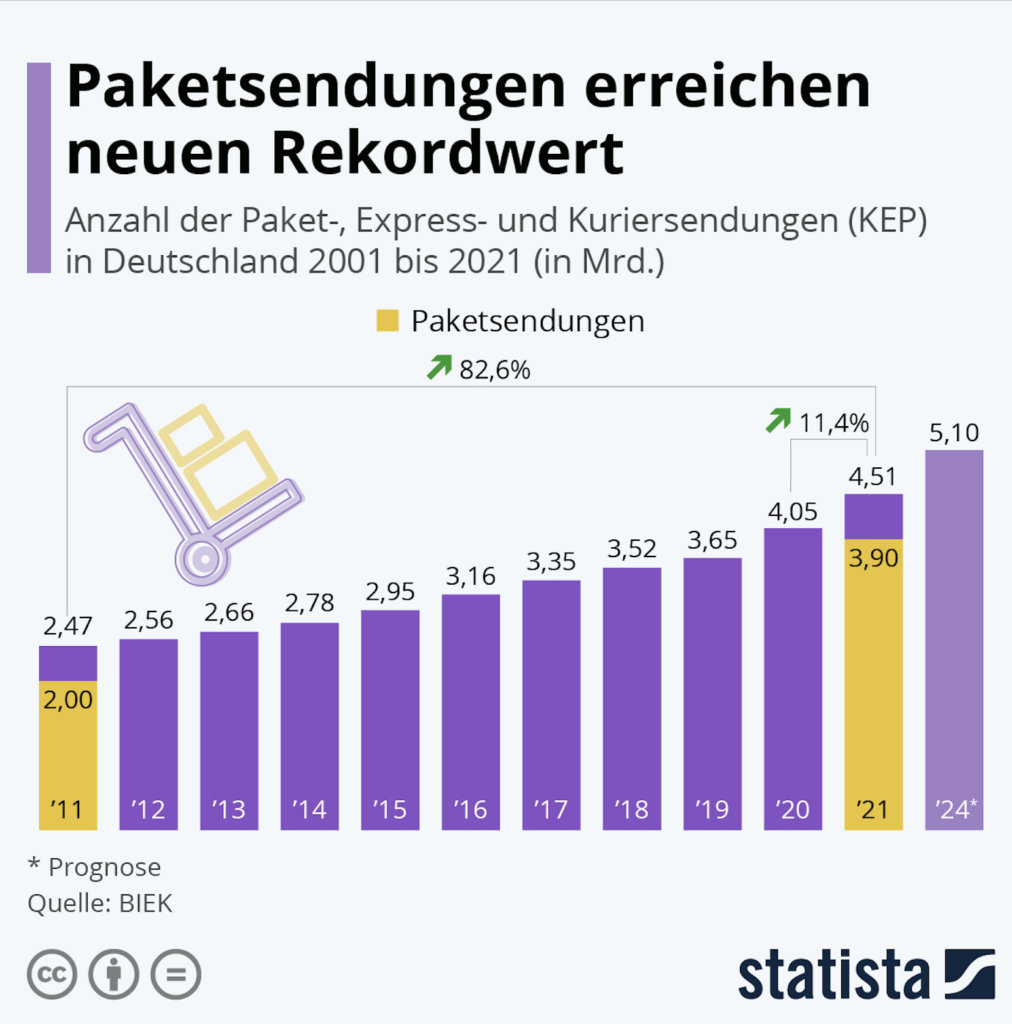

Logistics: How the Industry Can Withstand Growing Pressures with Kafka

Growing package volumes. Porous supply chains. Logistics companies face major challenges. They need to make the right decisions in real-time more than ever. However, this can't be achieved without real-time data. How Apache Kafka and its ecosystem help companies achieve this.

Read more