
Why Apache Kafka?

Let’s talk about a problem that almost certainly affects you.

Batch processing is still the standard in far too many companies. This is despite the fact that it cannot satisfy the demand for immediate access to information.

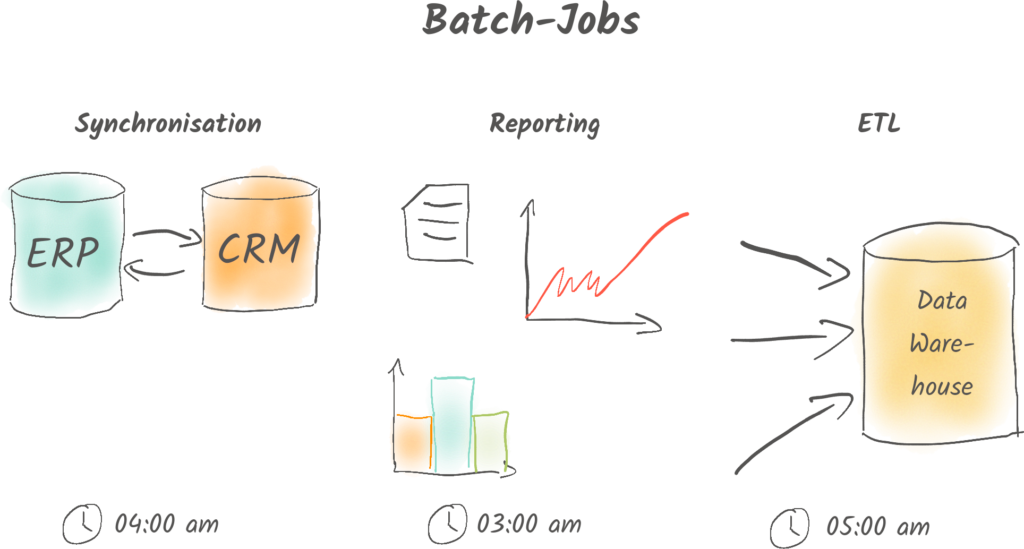

In 2023, the following is still the norm in many companies: every night, right on schedule, batch systems start their work. What do they do?

They synchronize data. They prepare reports on the previous day. They fill the organization's internal data warehouse. Note: all with yesterday's data. It's like a baker reheating old bread.

Don’t get me wrong: I’m not demonizing this approach on principle. Batch systems have their advantages.

- They use inexpensive computing capacity at night, which reduces server load during the hours when customers access the systems.
- They can efficiently process large amounts of data when the volume is known in advance.
- They are ideal for cyclical processing, especially payroll, taxes, and similar recurring processes.

But there's a catch. A small hint: it has to do with a Queen song.

I want it all. And I want it now.

Queen

Many customers want everything – and they want it RIGHT NOW. Unless you are truly out of the ordinary, you probably know this feeling yourself. That's just how people are wired.

However, batch systems always involve a delay. With them, there is no "now." That is a real handicap in a dynamic world that, no joke, even physically rotated faster in 2022.

By the next morning, yesterday's data has usually gathered dust. I wonder:

- Why do market leaders have no idea how much shampoo is ACTUALLY on the shelf RIGHT NOW?
- Why don't the people in charge at the parcel distribution center know how many parcels will arrive in the next hour?
- And why do some banks still make us wait weeks before telling us how much a credit card purchase abroad actually cost?

Batch systems are reliable, but in many areas they are just as reliably a roadblock.

How can we do better?

With Apache Kafka.

With this software, companies can finally arrive in the 21st century and access their most important data “live.”

Welcome to the world of real-time data processing!

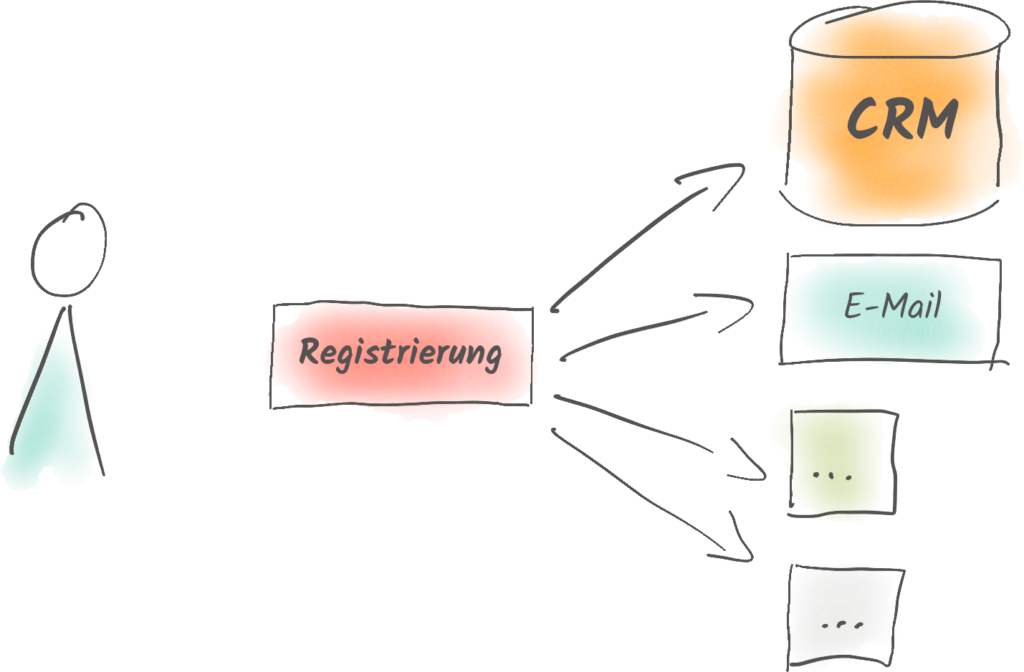

Instead of keeping data to themselves and only handing it over when asked (for example, at 4 AM when the batch job starts), IT systems should publish events, in other words data, the moment they occur. Ideally, these events are sorted and curated automatically.

What does this achieve? First of all, cost savings and competitive advantages. But that's not all:

- Different systems are connected instantly. For example, the CRM (customer relationship management) system can automatically forward a new contact to the software that sends the welcome email. It's not only people who share their knowledge faster; above all, programs do.
- Management gets a real-time overview of what is currently happening in the company.
- You can make decisions as soon as they are needed, not just tomorrow.
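To make the idea of publishing events a little more concrete, here is a minimal Python sketch of the CRM example. It does not use Kafka or any Kafka client library: `ToyTopic` and `ToyConsumer` are hypothetical, in-memory stand-ins that only mimic the basic idea of an append-only event log in which each consuming system tracks its own read position (its offset). The contact data and the two consuming services are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ToyTopic:
    """Hypothetical in-memory stand-in for a topic: an append-only event log."""
    events: list[Any] = field(default_factory=list)

    def append(self, event: Any) -> int:
        """Publish an event and return its offset (its position in the log)."""
        self.events.append(event)
        return len(self.events) - 1

    def read_from(self, offset: int) -> list[Any]:
        """Return every event at or after the given offset."""
        return self.events[offset:]


@dataclass
class ToyConsumer:
    """Each consumer remembers its own offset, so readers do not interfere."""
    topic: ToyTopic
    offset: int = 0

    def poll(self) -> list[Any]:
        """Fetch events published since the last poll and advance the offset."""
        new_events = self.topic.read_from(self.offset)
        self.offset += len(new_events)
        return new_events


contacts = ToyTopic()
email_service = ToyConsumer(contacts)  # hypothetical welcome-email sender
dashboard = ToyConsumer(contacts)      # hypothetical management overview

# The CRM publishes the event the moment the contact is created,
# instead of waiting for a nightly batch job.
contacts.append({"name": "Ada", "email": "ada@example.com"})

for event in email_service.poll():
    print(f"Sending welcome email to {event['email']}")

print(len(dashboard.poll()))  # prints 1: the dashboard sees the same event
print(email_service.poll())   # prints []: nothing new since the last poll
```

Because each consumer keeps its own offset, the email service and the dashboard read the same event without getting in each other's way, and a system added later can still read the retained history. A real Kafka deployment adds everything this toy leaves out, such as persistence, partitioning, replication, and networked clients.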

Apache Kafka makes all this possible, provided you can master this complex system. You'll soon be able to read on my blog how this works in microservice architectures.
